泉州网站建设选择讯呢南阳seo优化

目录

解压Hadoop

改名

更改配置文件

workers

hdfs-site.xml

core-site.xml

hadoop-env.sh

mapred-site.xml

yarn-site.xml

设置环境变量

启动集群

启动zk集群

启动journalnode服务

格式化hfds namenode

启动namenode

同步namenode信息

查看namenode节点状态

查看启动情况

关闭所有dfs有关的服务

格式化zk

启动dfs

启动yarn

查看resourcemanager节点状态

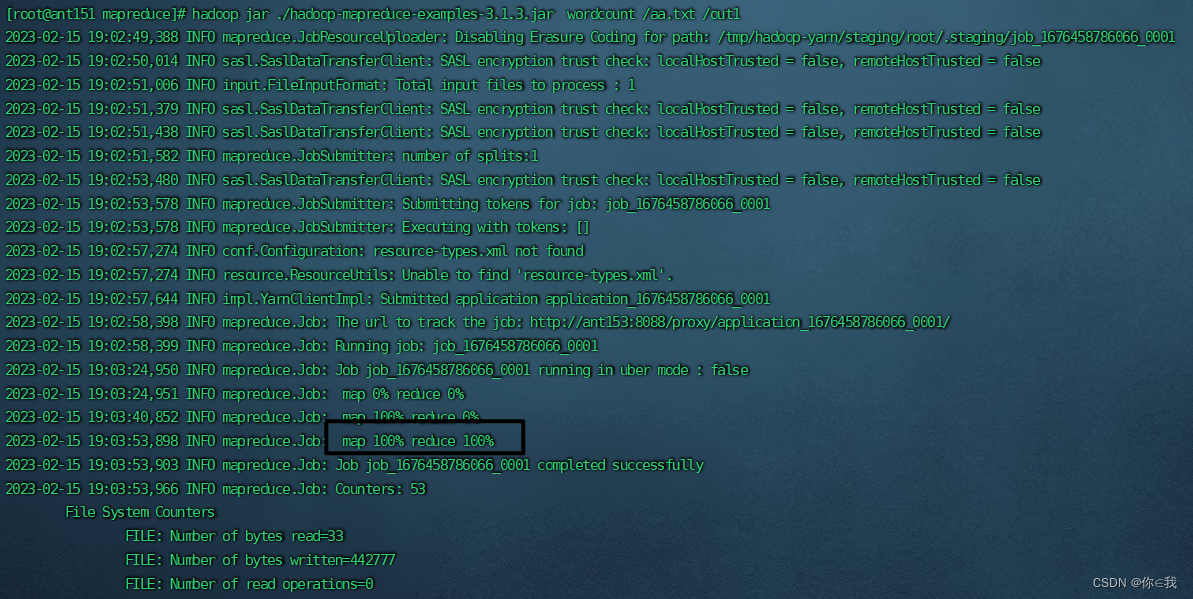

测试集群wordcount

创建一个TXT文件

上传到hdfs上面

查看输出结果

解压Hadoop

[root@ant51 install]# tar -zxvf ./hadoop-3.1.3.tar.gz -C ../soft/

改名

[root@ant151 install]# cd /opt/soft

[root@ant151 soft]# mv hadoop-3.1.3/ hadoop313

更改配置文件

workers

[root@ant151 ~] # cd /opt/soft/hadoop313/etc/hadoop

[root@ant151 hadoop] # vim workers

把所有的虚拟机加上去

hdfs-site.xml

[root@ant151 hadoop] # vim hdfs-site.xml

<configuration><property><name>dfs.replication</name><value>2</value><description>hadoop中每一个block文件的备份数量</description></property><property><name>dfs.namenode.name.dir</name><value>/opt/soft/hadoop313/data/dfs/name</value><description>namenode上存储hdfs名字空间元数据的目录</description></property><property><name>dfs.datanode.data.dir</name><value>/opt/soft/hadoop313/data/dfs/data</value><description>datanode上数据块的物理存储位置目录</description></property><property><name>dfs.namenode.secondary.http-address</name><value>ant151:9869</value><description></description></property><property><name>dfs.nameservices</name><value>gky</value><description>指定hdfs的nameservice,需要和core-site.xml中的保持一致</description></property><property><name>dfs.ha.namenodes.gky</name><value>nn1,nn2</value><description>gky为集群的逻辑名称,映射两个namenode逻辑名</description></property><property><name>dfs.namenode.rpc-address.gky.nn1</name><value>ant151:9000</value><description>namenode1的rpc通信地址</description></property>

<property><name>dfs.namenode.http-address.gky.nn1</name><value>ant151:9870</value><description>namenode1的http通信地址</description></property><property><name>dfs.namenode.rpc-address.gky.nn2</name><value>ant152:9000</value><description>namenode2的rpc通信地址</description></property>

<property><name>dfs.namenode.http-address.gky.nn2</name><value>ant152:9870</value><description>namenode2的http通信地址</description></property>

<property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://ant151:8485;ant152:8485;ant153:8485/gky</value><description>指定namenode的edits元数据的共享存储位置(JournalNode列表)</description></property>

<property><name>dfs.journalnode.edits.dir</name><value>/opt/soft/hadoop313/data/journaldata</value><description>指定JournalNode在本地磁盘存放数据的位置</description></property>

<!-- 容错 -->

<property><name>dfs.ha.automatic-failover.enabled</name><value>true</value><description>开启NameNode故障自动切换</description></property>

<property><name>dfs.client.failover.proxy.provider.gky</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value><description>如果失败后自动切换的实现的方式</description></property>

<property><name>dfs.ha.fencing.methods</name><value>sshfence</value><description>防止脑裂的处理</description></property>

<property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/root/.ssh/id_rsa</value><description>使用sshfence隔离机制时,需要用ssh免密登陆</description></property><property><name>dfs.permissions.enabled</name><value>false</value><description>关闭hdfs操作的权限验证</description></property><property><name>dfs.image.transfer.bandwidthPerSec</name><value>1048576</value><description></description></property><property><name>dfs.block.scanner.volume.bytes.per.second</name><value>1048576</value><description></description></property></configuration>core-site.xml

[root@ant151 hadoop] # vim core-site.xml

<configuration><property><name>fs.defaultFS</name><value>hdfs://gky</value><description>逻辑名称,必须与hdfs-site.xml中的dfs.nameservice值保持一致</description></property><property><name>hadoop.tmp.dir</name><value>/opt/soft/hadoop313/tmpdata</value><description>namenode上本地的hadoop临时文件夹</description></property><property><name>hadoop.http.staticuser.user</name><value>root</value><description>默认用户</description></property><property><name>io.file.buffer.size</name><value>131072</value><description>读写队列缓存:128k;读写文件的buffer大小</description></property><property><name>hadoop.proxyuser.root.hosts</name><value>*</value><description>代理用户</description></property><property><name>hadoop.proxyuser.root.groups</name><value>*</value><description>代理用户组</description></property><property><name>ha.zookeeper.quorum</name><value>ant151:2181,ant152:2181,ant153:2181</value><description>高可用用户连接</description></property><property><name>ha.zookeeper.session-timeout.ms</name><value>10000</value><description>hadoop连接zookeeper会话的超时时长为10s</description></property>

</configuration>

hadoop-env.sh

[root@ant151 hadoop] # vim hadoop-env.sh

大概54行左右JAVA_HOME

export JAVA_HOME=/opt/soft/jdk180

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export HDFS_JOURNALNODE_USER=root

export HDFS_ZKFC_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

mapred-site.xml

[root@ant151 hadoop] # vim mapred-site.xml

<configuration><property><name>mapreduce.framework.name</name><value>yarn</value><description>job执行框架:local,classic or yarn</description><final>true</final></property><property><name>mapreduce.application.classpath</name><value>/opt/soft/hadoop313/etc/hadoop:/opt/soft/hadoop313/share/hadoop/common/lib/*:/opt/soft/hadoop313/share/hadoop/common/*:/opt/soft/hadoop313/share/hadoop/hdfs/*:/opt/soft/hadoop313/share/hadoop/hdfs/lib/*:/opt/soft/hadoop313/share/hadoop/mapreduce/*:/opt/soft/hadoop313/share/hadoop/mapreduce/lib/*:/opt/soft/hadoop313/share/hadoop/yarn/*:/opt/soft/hadoop313/share/hadoop/yarn/lib/*</value></property><property><name>mapreduce.jobhistory.address</name><value>ant151:10020</value></property><property><name>mapreduce.jobhistory.webapp.address</name><value>ant151:19888</value></property><property><name>mapreduce.map.memory.mb</name><value>1024</value><description>map阶段task工作内存</description></property><property><name>mapreduce.reduce.memory.mb</name><value>1024</value><description>reduce阶段task工作内存</description></property></configuration>

yarn-site.xml

[root@ant151 hadoop] # vim yarn-site.xml

<configuration><property><name>yarn.resourcemanager.ha.enabled</name><value>true</value><description>开启resourcemanager高可用</description></property><property><name>yarn.resourcemanager.cluster-id</name><value>yrcabc</value><description>指定yarn集群中的id</description></property><property><name>yarn.resourcemanager.ha.rm-ids</name><value>rm1</value><description>指定resourcemanager的名字</description></property><property><name>yarn.resourcemanager.hostname.rm1</name><value>ant153</value><description>设置rm1的名字</description></property><property><name>yarn.resourcemanager.webapp.address.rm1</name><value>ant153:8088</value><description></description></property> <property><name>yarn.resourcemanager.zk-address</name><value>ant151:2181,ant152:2181,ant153:2181</value><description>指定zk集群地址</description></property><property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value><description>运行mapreduce程序必须配置的附属服务</description></property><property><name>yarn.nodemanager.local-dirs</name><value>/opt/soft/hadoop313/tmpdata/yarn/local</value><description>nodemanager本地存储目录</description></property><property><name>yarn.nodemanager.log-dirs</name><value>/opt/soft/hadoop313/tmpdata/yarn/log</value><description>nodemanager本地日志目录</description></property><property><name>yarn.nodemanager.resource.memory-mb</name><value>1024</value><description>resource进程的工作内存</description></property><property><name>yarn.nodemanager.resource.cpu-vcores</name><value>2</value><description>resource工作中所能使用机器的内核数</description></property><property><name>yarn.scheduler.minimum-allocation-mb</name><value>256</value><description></description></property><property><name>yarn.log-aggregation-enable</name><value>true</value><description></description></property><property><name>yarn.log-aggregation.retain-seconds</name><value>86400</value><description>日志保留多少秒</description></property><property><name>yarn.nodemanager.vmem-check-enabled</name><value>false</value><description></description></property><property><name>yarn.application.classpath</name><value>/opt/soft/hadoop313/etc/hadoop:/opt/soft/hadoop313/share/hadoop/common/lib/*:/opt/soft/hadoop313/share/hadoop/common/*:/opt/soft/hadoop313/share/hadoop/hdfs/*:/opt/soft/hadoop313/share/hadoop/hdfs/lib/*:/opt/soft/hadoop313/share/hadoop/mapreduce/*:/opt/soft/hadoop313/share/hadoop/mapreduce/lib/*:/opt/soft/hadoop313/share/hadoop/yarn/*:/opt/soft/hadoop313/share/hadoop/yarn/lib/*</value><description></description></property><property><name>yarn.nodemanager.env-whitelist</name><value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value><description></description></property>

</configuration>

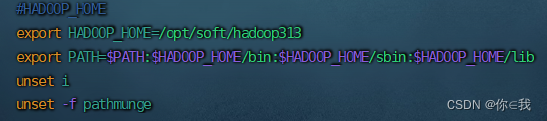

设置环境变量

[root@ant151 hadoop] # vim /etc/profile

#HADOOP_HOME

export HADOOP_HOME=/opt/soft/hadoop313

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/lib

配置完成之后把hadoop313和配置文件拷贝到其余机器上面

hadoop

[root@ant151 shell]# scp -r ./hadoop313/ root@ant152:/opt/soft/

[root@ant151 shell]# scp -r ./hadoop313/ root@ant153:/opt/soft/环境变量

[root@ant151 shell]# scp /etc/profile root@ant152:/etc

[root@ant151 shell]# scp /etc/profile root@ant153:/etc

所有机器刷新资源[root@ant151 shell]# source /etc/profile

启动集群

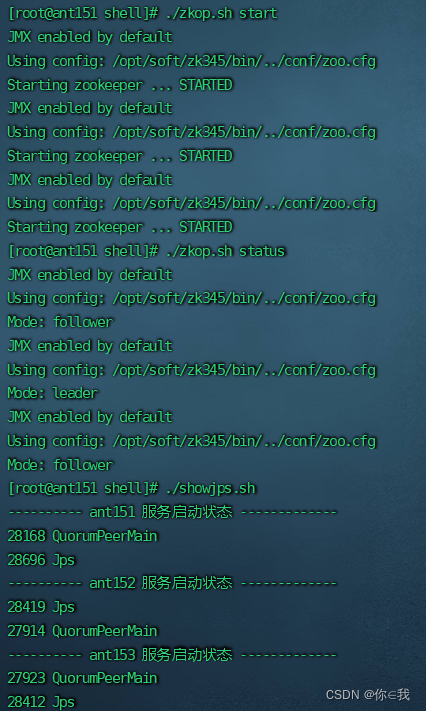

启动zk集群

[root@ant151 shell]# ./zkop.sh start

[root@ant151 shell]# ./zkop.sh status

[root@ant151 shell]# ./showjps.sh

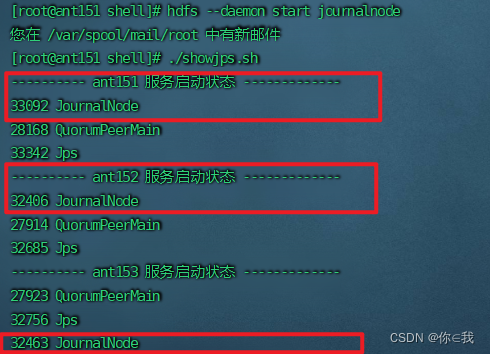

启动journalnode服务

启动ant151,ant152,ant153的journalnode服务

[root@ant151 soft]# hdfs --daemon start journalnode

格式化hfds namenode

在ant151上面操作

[root@ant151 soft]# hdfs namenode -format

启动namenode

在ant151上面操作

[root@ant151 soft]# hdfs --daemon start namenode

同步namenode信息

在ant152上操作

[root@ant152 soft]# hdfs namenode -bootstrapStandby

启动namenode

[root@ant152 soft]# hdfs --daemon start namenode

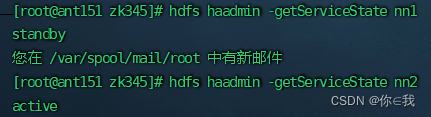

查看namenode节点状态

[root@ant151 zk345]# hdfs haadmin -getServiceState nn1

[root@ant151 zk345]# hdfs haadmin -getServiceState nn2

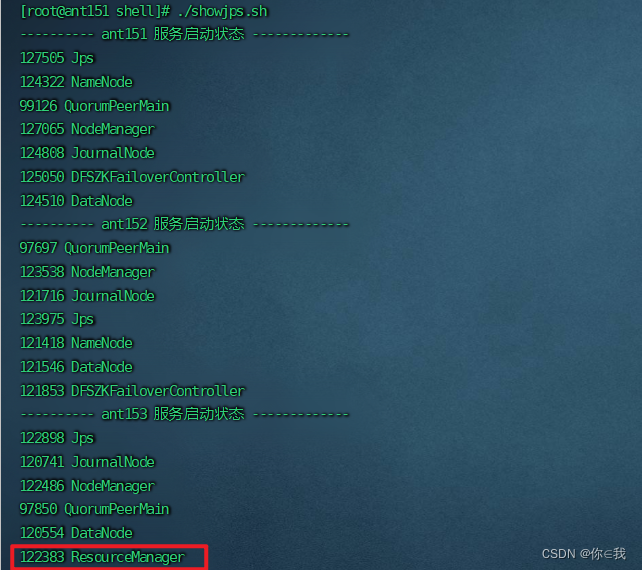

查看启动情况

[root@ant152 shell]# ./showjps.sh

关闭所有dfs有关的服务

[root@ant151 soft]# stop-dfs.sh

格式化zk

[root@ant151 soft]# hdfs zkfc -formatZK

启动dfs

[root@ant151 soft]# start-dfs.sh

启动yarn

[root@ant151 soft]# start-yarn.sh

查看resourcemanager节点状态

[root@ant151 zk345]# yarn rmadmin -getServiceState rm1

测试集群wordcount

创建一个TXT文件

[root@ant151 soft]# vim ./aa.txt

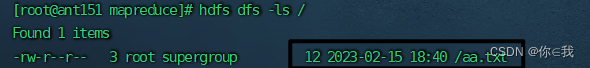

上传到hdfs上面

[root@ant151 soft]# hdfs dfs -put ./aa.txt /

查看

[root@ant151 soft]# hdfs dfs -ls /

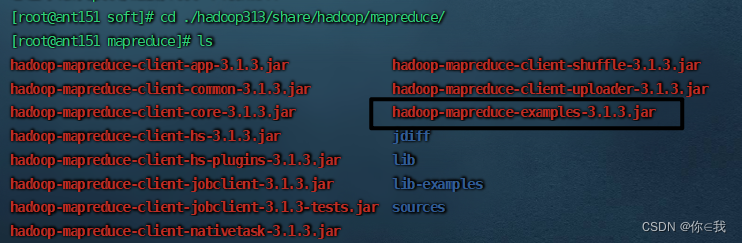

mapreduce里面的jar包运行wordcount

运行

[root@ant151 mapreduce]# hadoop jar ./hadoop-mapreduce-examples-3.1.3.jar wordcount /aa.txt /out1

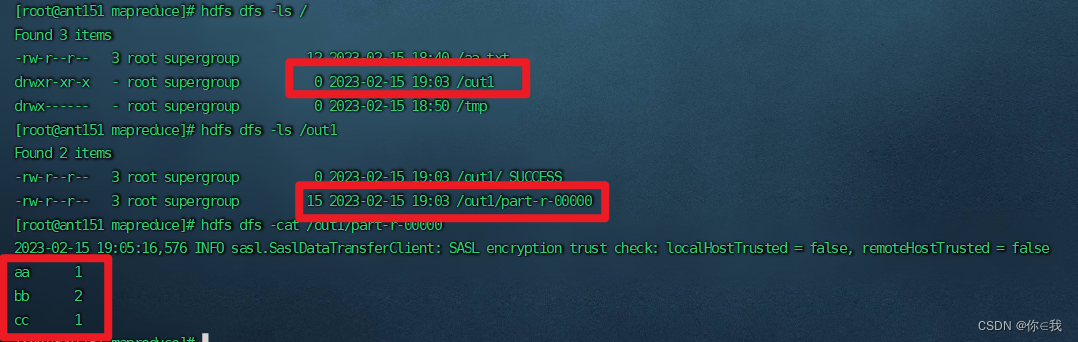

查看输出结果

[root@ant151 mapreduce]# hdfs dfs -ls /

[root@ant151 mapreduce]# hdfs dfs -ls /out1

[root@ant151 mapreduce]# hdfs dfs -cat /out1/part-r-00000出现

aa 1

bb 2

cc 3

则成功