建设网站运营收入国际域名查询网站

一、安装配置环境

1、准备工作

代码地址

GitHub - bubbliiiing/classification-pytorch: 这是各个主干网络分类模型的源码,可以用于训练自己的分类模型。

# 创建环境

conda create -n ptorch1_2_0 python=3.6

# 然后启动

conda install pytorch==1.2.0 torchvision==0.4.0 cudatoolkit=10.0 -c pytorch

pip install scipy==1.2.1 numpy==1.17.0 matplotlib==3.1.2 opencv_python==4.1.2.30 tqdm==4.60.0 Pillow==8.2.0 h5py==2.10.0

下载好后 他的那个数据集 按他那个配置,然后在项目根目录下运行

python txt_annotation.py 生成对应的 txt 文件

2、遇到的问题

1、

ImportError: TensorBoard logging requires TensorBoard with Python summary writer installed. This should be available in 1.14 or above.解决

pip install tensorboard2、

ModuleNotFoundError: No module named 'past'解决办法

pip install future3、

ImportError: libSM.so.6: cannot open shared object file: No such file or directory

# 和

ImportError: libXrender.so.1: cannot open shared object file: No such file or directory解决

apt-get install libsm6

apt-get install libxrender1二、debug 记录

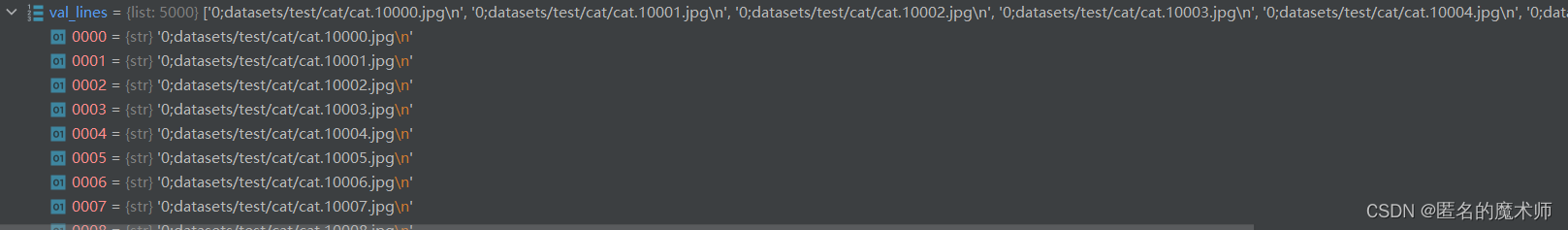

1、train_lines

val_lines

2、 show_config

----------------------------------------------------------------------

| keys | values|

----------------------------------------------------------------------

| num_classes | 2|

| backbone | mobilenetv2|

| model_path | |

| input_shape | [224, 224]|

| Init_Epoch | 0|

| Freeze_Epoch | 50|

| UnFreeze_Epoch | 200|

| Freeze_batch_size | 32|

| Unfreeze_batch_size | 32|

| Freeze_Train | True|

| Init_lr | 0.01|

| Min_lr | 0.0001|

| optimizer_type | sgd|

| momentum | 0.9|

| lr_decay_type | cos|

| save_period | 10|

| save_dir | logs|

| num_workers | 4|

| num_train | 20000|

| num_val | 5000|

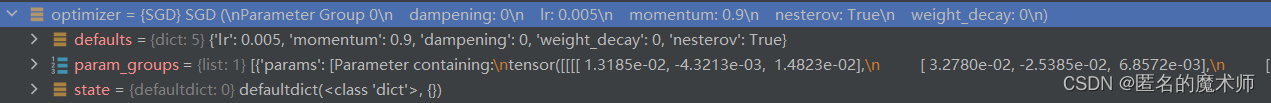

----------------------------------------------------------------------3、 optimizer

4、打印日志

Start Train

Epoch 1/200: 0%| | 0/625 [00:00<?, ?it/s<class 'dict'>]utils_fit.py --- 19

if local_rank == 0:print('Start Train')pbar = tqdm(total=epoch_step,desc=f'Epoch {epoch + 1}/{Epoch}',postfix=dict,mininterval=0.3)5、

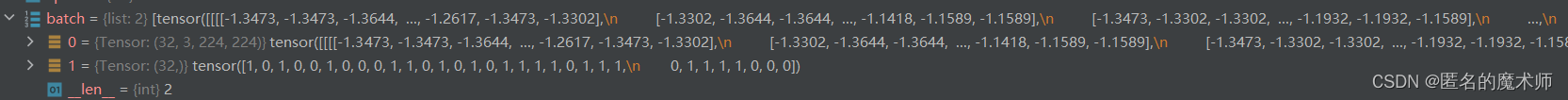

gen

batch

三、其它

1、打印模型 model, 这个应该是 backbone

MobileNetV2((features): Sequential((0): ConvBNReLU((0): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=32, bias=False)(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): Conv2d(32, 16, kernel_size=(1, 1), stride=(1, 1), bias=False)(2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(2): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(16, 96, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(96, 96, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=96, bias=False)(1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(96, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(3): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(24, 144, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(144, 144, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=144, bias=False)(1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(144, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(4): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(24, 144, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(144, 144, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=144, bias=False)(1): BatchNorm2d(144, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(144, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(5): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(192, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=192, bias=False)(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(6): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(192, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=192, bias=False)(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(7): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(192, 192, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=192, bias=False)(1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(8): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(384, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(9): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(384, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(10): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(384, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(11): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(64, 384, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=384, bias=False)(1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(384, 96, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(12): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(96, 576, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(576, 576, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=576, bias=False)(1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(576, 96, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(13): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(96, 576, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(576, 576, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=576, bias=False)(1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(576, 96, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(14): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(96, 576, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(576, 576, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=576, bias=False)(1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(576, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(15): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(960, 960, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=960, bias=False)(1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(16): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(960, 960, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=960, bias=False)(1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(17): InvertedResidual((conv): Sequential((0): ConvBNReLU((0): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(1): ConvBNReLU((0): Conv2d(960, 960, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=960, bias=False)(1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True))(2): Conv2d(960, 320, kernel_size=(1, 1), stride=(1, 1), bias=False)(3): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(18): ConvBNReLU((0): Conv2d(320, 1280, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(1280, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU6(inplace=True)))(classifier): Sequential((0): Dropout(p=0.2, inplace=False)(1): Linear(in_features=1280, out_features=2, bias=True))

)2、数据集导入与建立

train.py --- 384 397

train_dataset = DataGenerator(train_lines, input_shape, True)

val_dataset = DataGenerator(val_lines, input_shape, False)gen = DataLoader(train_dataset, shuffle=shuffle, batch_size=batch_size, num_workers=num_workers, pin_memory=True, drop_last=True, collate_fn=detection_collate, sampler=train_sampler)

gen_val = DataLoader(val_dataset, shuffle=shuffle, batch_size=batch_size, num_workers=num_workers, pin_memory=True,drop_last=True, collate_fn=detection_collate, sampler=val_sampler)3、 开始训练模型

train.py --- 404

for epoch in range(Init_Epoch, UnFreeze_Epoch):训练过程在 train.py --- 452

fit_one_epoch(model_train, model, loss_history, optimizer, epoch, epoch_step, epoch_step_val, gen, gen_val, UnFreeze_Epoch, Cuda, fp16, scaler, save_period, save_dir, local_rank)

4、调整学习率

train.py --- 450

set_optimizer_lr(optimizer, lr_scheduler_func, epoch)5、前向传播的入口

utils_fit.py --- 40

outputs = model_train(images)